Fast deformable model-based human performance capture and FVV using consumer-grade RGB-D sensors

Feb 13, 2018

1 min read

Dimitrios S. Alexiadis

Nikolaos Zioulis

Dimitrios Zarpalas

Petros Daras

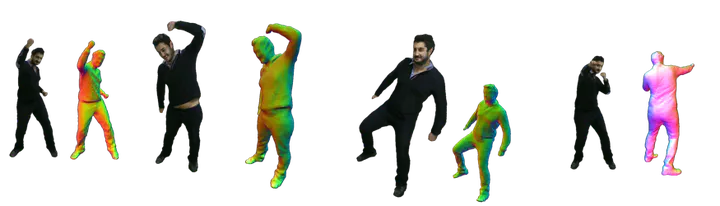

Performance Capture Results

Abstract

In this paper, a novel end-to-end system for the fast reconstruction of human actor performances into 3D mesh sequences is proposed, using the input from a small set of consumer-grade RGB-Depth sensors. The proposed framework, by offline pre-reconstructing and employing a deformable actor’s 3D model to constrain the on-line reconstruction process, implicitly tracks the human motion. Handling non-rigid deformation of the 3D surface and applying appropriate texture mapping, it finally produces a dynamic sequence of temporally-coherent textured meshes, enabling realistic Free Viewpoint Video (FVV). Given the noisy input from a small set of low-cost sensors, the focus is on the fast (“quick-post”), robust and fully-automatic performance reconstruction. Apart from integrating existing ideas into a complete end-to-end system, which is itself a challenging task, several novel technical advances contribute to the speed, robustness and fidelity of the system, including a layered approach for model-based pose tracking, the definition and use of sophisticated energy functions, parallelizable on the GPU, as well as a new texture mapping scheme. The experimental results on a large number of challenging sequences, and comparisons with model-based and model-free approaches, demonstrate the efficiency of the proposed approach.

Publication

In Pattern Recognition, Elsevier
