Spherical View Synthesis for Self‑Supervised 360 Depth Estimation

Sep 16, 2019

1 min read

Nikolaos Zioulis

Antonis Karakottas

Dimitrios Zarpalas

Federico Alvarez

Petros Daras

Trinocular Spherical Stereo

Abstract

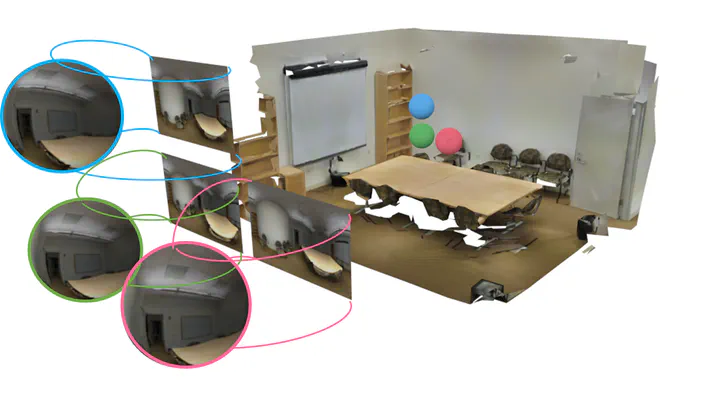

Learning-based approaches for depth perception are limited by the availability of clean training data. This has led to the use of view synthesis as an indirect objective for learning depth estimation, which allows for more efficient data acquisition procedures. Nonetheless, most research focuses on pinhole-based monocular vision, with few works presenting results for omnidirectional input. In this work, we explore spherical view synthesis for learning monocular 360 depth in a self-supervised manner and demonstrate its feasibility. Under a purely geometrically derived formulation, we present results for horizontal and vertical baselines, as well as for the trinocular case. Further, we show how to efficiently exploit the expressiveness of traditional CNNs when applied to the equirectangular domain. Finally, given the availability of ground-truth depth data, our work is uniquely positioned to compare view synthesis against direct supervision in a consistent and fair manner. The results indicate that alternative research directions might be better suited to enable higher-quality depth perception. Our data, models and code are publicly available at

.
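As a rough illustration of the geometry behind spherical view synthesis, the following sketch warps a source equirectangular image into a target viewpoint, given per-pixel depth for the target view and a pure translation (baseline) between the two cameras. It is not the authors' released code. The axis convention (y up, z forward, x right), the pixel-center mapping, the function names, and the bilinear sampler with longitude wrap-around are all assumptions made for this example. In a self-supervised setup, the synthesized image would be compared with the real target image through a photometric loss, which indirectly supervises the predicted depth.

```python
import numpy as np

# Illustrative sketch only: conventions and names below are assumptions,
# not taken from the paper's implementation.


def pixel_grid_angles(height, width):
    """Longitude/latitude (radians) of every equirectangular pixel center."""
    u = (np.arange(width) + 0.5) / width    # normalized column in (0, 1)
    v = (np.arange(height) + 0.5) / height  # normalized row in (0, 1)
    lon = (u - 0.5) * 2.0 * np.pi           # in (-pi, pi)
    lat = (0.5 - v) * np.pi                 # in (-pi/2, pi/2), top row near +pi/2
    return np.meshgrid(lon, lat)            # each of shape (height, width)


def sphere_to_xyz(lon, lat, radius):
    """Spherical -> Cartesian, assuming y points up, z forward, x right."""
    x = radius * np.cos(lat) * np.sin(lon)
    y = radius * np.sin(lat)
    z = radius * np.cos(lat) * np.cos(lon)
    return np.stack([x, y, z], axis=-1)


def xyz_to_pixel(points, height, width):
    """Project 3D points onto continuous equirectangular pixel coordinates."""
    x, y, z = points[..., 0], points[..., 1], points[..., 2]
    r = np.linalg.norm(points, axis=-1)
    lon = np.arctan2(x, z)
    lat = np.arcsin(np.clip(y / np.maximum(r, 1e-8), -1.0, 1.0))
    col = (lon / (2.0 * np.pi) + 0.5) * width - 0.5
    row = (0.5 - lat / np.pi) * height - 0.5
    return col, row


def bilinear_sample(image, col, row):
    """Sample an (H, W) image; longitude wraps around, latitude is clamped."""
    h, w = image.shape
    c0 = np.floor(col).astype(int)
    r0 = np.floor(row).astype(int)
    fc, fr = col - c0, row - r0
    c0w, c1w = c0 % w, (c0 + 1) % w         # horizontal wrap-around
    r0c = np.clip(r0, 0, h - 1)             # vertical clamp at the poles
    r1c = np.clip(r0 + 1, 0, h - 1)
    top = (1 - fc) * image[r0c, c0w] + fc * image[r0c, c1w]
    bottom = (1 - fc) * image[r1c, c0w] + fc * image[r1c, c1w]
    return (1 - fr) * top + fr * bottom


def synthesize_view(source, target_depth, baseline):
    """Warp `source` into the target view using depth predicted for the target.

    `baseline` is the (hypothetical) position of the source camera expressed
    in the target camera frame; both cameras are assumed to share orientation.
    """
    h, w = target_depth.shape
    lon, lat = pixel_grid_angles(h, w)
    points = sphere_to_xyz(lon, lat, target_depth)           # target frame
    points_in_source = points - np.asarray(baseline, float)  # pure translation
    col, row = xyz_to_pixel(points_in_source, h, w)
    return bilinear_sample(source, col, row)
```

Under these assumptions, a horizontal baseline of length `b` would be passed as `[b, 0, 0]` and a vertical one as `[0, b, 0]`; a trinocular arrangement could be handled by warping two source views into the same target and combining their photometric errors.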

Type

Publication

In 2019 International Conference on 3D Vision (3DV)
