Single-shot cuboids: Geodesics-based end-to-end Manhattan aligned layout estimation from spherical panoramas

Mar 18, 2021

Nikolaos Zioulis

Federico Alvarez

Dimitrios Zarpalas

Petros Daras

End-to-end Manhattan Aligned Layout Estimation

End-to-end Manhattan Aligned Layout EstimationAbstract

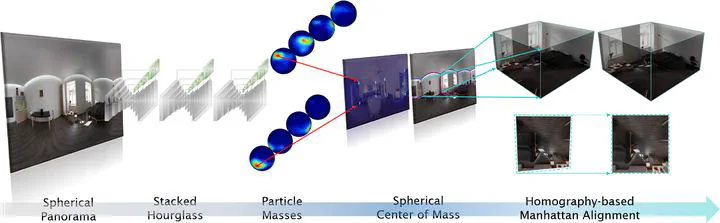

It has been shown that global scene understanding tasks like layout estimation can benefit from wider fields of view, and specifically from spherical panoramas. While much progress has been made recently, all previous approaches rely on intermediate representations and postprocessing to produce Manhattan-aligned estimates. In this work we show how to estimate full room layouts in a single shot, eliminating the need for postprocessing. Our work is the first to directly infer Manhattan-aligned outputs. To achieve this, our data-driven model exploits direct coordinate regression and is supervised end-to-end. As a result, we can explicitly add quasi-Manhattan constraints, which set the necessary conditions for a homography-based Manhattan alignment module. Finally, we introduce geodesic heatmaps with a corresponding loss, as well as a boundary-aware center-of-mass calculation, which together facilitate higher-quality keypoint estimation in the spherical domain. Our models and code are publicly available at

.
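The abstract mentions geodesic heatmaps and a boundary-aware center of mass for keypoints on the sphere. The plain-Python sketch below illustrates one way these two ideas can be realized; it is not the authors' implementation. It assumes an equirectangular image of `width` by `height` pixels with longitude spanning [-π, π) from left to right, a Gaussian of the great-circle distance with a hypothetical bandwidth `sigma` in radians, and a center of mass obtained by averaging unit vectors in 3D. That last step is a swapped-in technique for handling the left/right image seam and is not necessarily the paper's boundary-aware calculation. All function names are hypothetical.

```python
import math


def pixel_to_sphere(u, v, width, height):
    """Map an equirectangular pixel (u, v) to (longitude, latitude) in radians."""
    lon = (u + 0.5) / width * 2.0 * math.pi - math.pi  # [-pi, pi), left to right
    lat = math.pi / 2.0 - (v + 0.5) / height * math.pi  # top row near +pi/2
    return lon, lat


def geodesic_distance(lon1, lat1, lon2, lat2):
    """Great-circle distance in radians (haversine formula); wraps at +/-pi."""
    a = (math.sin((lat2 - lat1) / 2.0) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2.0) ** 2)
    return 2.0 * math.asin(min(1.0, math.sqrt(a)))


def geodesic_heatmap(lon0, lat0, width, height, sigma):
    """Hypothetical helper: Gaussian of the geodesic (not pixel) distance to (lon0, lat0)."""
    heat = []
    for v in range(height):
        row = []
        for u in range(width):
            lon, lat = pixel_to_sphere(u, v, width, height)
            d = geodesic_distance(lon, lat, lon0, lat0)
            row.append(math.exp(-(d * d) / (2.0 * sigma * sigma)))
        heat.append(row)
    return heat


def spherical_center_of_mass(heat):
    """Average unit vectors on the sphere, weighted by heat and pixel area.

    Averaging in 3D instead of in pixel coordinates means mass that straddles
    the left/right image border is not split across the image.
    """
    height, width = len(heat), len(heat[0])
    x = y = z = 0.0
    for v in range(height):
        for u in range(width):
            lon, lat = pixel_to_sphere(u, v, width, height)
            w = heat[v][u] * math.cos(lat)  # equirectangular pixel area ~ cos(lat)
            x += w * math.cos(lat) * math.cos(lon)
            y += w * math.cos(lat) * math.sin(lon)
            z += w * math.sin(lat)
    return math.atan2(y, x), math.atan2(z, math.hypot(x, y))


def naive_pixel_center_of_mass(heat):
    """Plain weighted mean of pixel coordinates, ignoring the 360-degree wrap."""
    total = su = sv = 0.0
    for v, row in enumerate(heat):
        for u, w in enumerate(row):
            total += w
            su += w * u
            sv += w * v
    return su / total, sv / total


if __name__ == "__main__":
    W, H = 128, 64
    lon0, lat0 = math.radians(179.0), math.radians(-20.0)  # keypoint next to the seam
    heat = geodesic_heatmap(lon0, lat0, W, H, sigma=0.1)
    lon_s, lat_s = spherical_center_of_mass(heat)
    lon_n, lat_n = pixel_to_sphere(*naive_pixel_center_of_mass(heat), W, H)
    print("spherical:", math.degrees(lon_s), math.degrees(lat_s))
    print("pixel-space:", math.degrees(lon_n), math.degrees(lat_n))
```

With a keypoint at 179° longitude, a large share of the heatmap mass wraps around to the opposite image border. The spherical average stays next to the keypoint, while the plain pixel-space average lands near the middle of the panorama, far from either side of the seam.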

Type

Publication

In Image and Vision Computing, Elsevier
