Towards Practical Single-shot Motion Synthesis

Jun 3, 2024

1 min read

Konstantinos Roditakis

Spyridon Thermos

Nikolaos Zioulis

GANimator++

Abstract

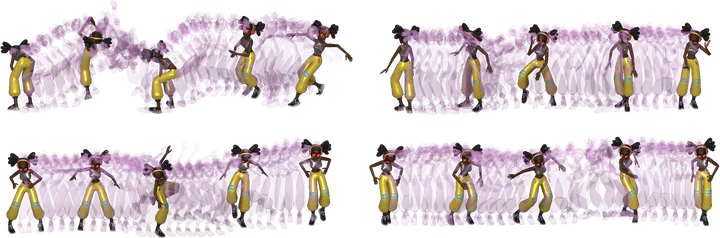

Despite recent advances in so-called “cold start” generation from text prompts, the data and computing resources these models require, together with ambiguities around intellectual property and privacy, raise concerns about their utility. An interesting and relatively unexplored alternative is unconditional synthesis from a single sample, which has enabled several novel generative applications. In this paper we focus on single-shot motion generation, and more specifically on reducing the training time of a Generative Adversarial Network (GAN). In particular, we tackle the collapse of the GAN equilibrium that occurs under mini-batch training by carefully annealing the weights of the loss functions that prevent mode collapse. Additionally, we perform a statistical analysis of the generator and discriminator models to identify correlations between training stages and enable transfer learning. Our improved GAN achieves competitive quality and diversity on the Mixamo benchmark compared to the original GAN architecture and a single-shot diffusion model, while training up to ×6.8 faster than the former and ×1.75 faster than the latter. Finally, we demonstrate the ability of our improved GAN to mix and compose motion in a single forward pass.
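The idea of annealing a loss weight over training can be illustrated with a minimal sketch. The abstract does not specify the schedule shape, the start and end weights, or exactly which loss terms are annealed, so everything below is a hypothetical assumption rather than the authors' implementation: the function names `annealed_weight` and `total_loss`, the linear and cosine schedules, and the choice to anneal a single reconstruction-style term alongside the adversarial loss.

```python
import math


def annealed_weight(step: int, total_steps: int, w_start: float, w_end: float,
                    schedule: str = "cosine") -> float:
    """Hypothetical schedule: move a loss weight from w_start to w_end over total_steps.

    Works in either direction (increasing or decreasing), since the abstract
    does not say which way the weights are annealed.
    """
    if total_steps <= 0:
        return w_end
    # Training progress, clamped to [0, 1] so steps past the end keep w_end.
    t = min(max(step / total_steps, 0.0), 1.0)
    if schedule == "linear":
        alpha = t
    elif schedule == "cosine":
        # Smooth ramp: slow change near the start and end, faster in the middle.
        alpha = 0.5 * (1.0 - math.cos(math.pi * t))
    else:
        raise ValueError(f"unknown schedule: {schedule}")
    return w_start + (w_end - w_start) * alpha


def total_loss(adv_loss: float, rec_loss: float, step: int, total_steps: int,
               w_rec_start: float, w_rec_end: float) -> float:
    """Hypothetical generator objective: adversarial term plus an annealed
    anti-mode-collapse (reconstruction-style) term."""
    w_rec = annealed_weight(step, total_steps, w_rec_start, w_rec_end)
    return adv_loss + w_rec * rec_loss
```

In a training loop, `total_loss` would be evaluated once per mini-batch with the current step, so the balance between the adversarial term and the term guarding against mode collapse shifts gradually rather than abruptly.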

Type

Publication

In AI for 3D Generation Workshop, Computer Vision and Pattern Recognition Workshops (CVPR 2024)